Autonomous Testing: A Complete Guide

What is Autonomous Testing?

Autonomous testing is a software testing approach where tests are completely created, driven, and managed by AI/ML or automation technologies, eliminating the need for human intervention.

The end goal of autonomous testing is to streamline the software testing process and enhance its efficiency, freeing testers to focus on strategic activities.

With autonomous testing, the system can function as an independent entity, taking full control over the process of end-to-end testing thanks to intelligent algorithms. The autonomous testing tool can locate and inject the necessary data, analyze it, and then perform all of the testing activities, from Test Management and Test Orchestration to Test Evaluation and Reporting.

Essentially, autonomous testing is a higher level of automation for automation testing. This is a recent shift in the testing industry as AI technologies evolve to be more advanced, enabling us to open a promising era of human-machine integration.

Such a tool is also ever-improving, continuously learning from historical test data to evolve its model along with the organization’s specific needs. Even better, it can perform integration testing to see how the different areas of a codebase fit together as a unified application.

Key Components of Autonomous Testing

There are many ways to harness the power of AI and ML for autonomous testing, and the key to unlocking these capabilities lies in understanding their potential and then creatively integrating them into your everyday testing routines.

Here are 5 key components of an AI-powered autonomous testing system based on the stages of a software testing life cycle:

- Test Planning: AI can assist in analyzing complex software requirements to identify potential ambiguities in the system. For web testing especially, we can leverage this technology to suggest optimal testing strategies based on its analysis of real-time and historical traffic data, selecting only the high-risk areas that should be prioritized for testing. We can then configure these AI-recommended test cases to be scheduled for execution automatically.

- Test Creation: AI can automatically generate detailed descriptions for manual test cases from requirements, specifications, and even application usage data. With the ever-improving power of current generative AI, we can even create test scripts in the programming language we need through effective prompt engineering. Test data generation is also easier, as we can simply instruct the AI to produce a comprehensive dataset following criteria we set out, then export it in CSV or XML format for more efficient data-driven testing.

- Test Management: AI can categorize test cases into certain groups (risk, severity, time to fix, bug type, reproducibility, root cause, areas of impact, etc.) for easier management and prioritization. AI can also assist with test data management and anonymization, helping ensure data privacy compliance.

- Test Execution: AI-driven systems can execute test cases autonomously, including regression testing, which is a highly repetitive area and would benefit tremendously from automation. AI can also autonomously identify broken locators (due to changes in the codebase that were not yet reflected in the test scripts) and fix them to keep the tests running. This is a feature known as Self-healing.

- Debugging: Based on patterns and defect logs, AI can intelligently classify bugs and even perform root cause analysis, localize the area where the issue happens, then suggest potential action items to address it.
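To make the self-healing idea from the Test Execution stage more concrete, here is a minimal sketch in Python. It is not how any particular tool implements the feature: the `HealingLocator` class, its `strategies` list, and the dictionary-based "page" are all hypothetical stand-ins for a real DOM and locator engine. The sketch simply tries a preferred locator first, falls back to alternatives when it breaks, and remembers the one that worked for later runs.

```python
from dataclasses import dataclass, field
from typing import Optional

# Toy stand-in for a DOM: each element is a dict of attributes (an assumption for this sketch).
Element = dict[str, str]


@dataclass
class HealingLocator:
    """Hypothetical locator holding an ordered list of (attribute, value) strategies."""
    strategies: list[tuple[str, str]]  # most preferred strategy first
    heal_log: list[tuple[str, str]] = field(default_factory=list)

    def find(self, page: list[Element]) -> Optional[Element]:
        for index, (attr, value) in enumerate(self.strategies):
            for element in page:
                if element.get(attr) == value:
                    if index > 0:
                        # The preferred strategy broke: record it and promote the
                        # strategy that worked so the next run tries it first.
                        self.heal_log.append(self.strategies[0])
                        self.strategies.insert(0, self.strategies.pop(index))
                    return element
        return None  # nothing matched, so a human still has to repair the test


login = HealingLocator([("id", "btn-login"), ("text", "Log in")])
old_page = [{"id": "btn-login", "text": "Log in"}]
new_page = [{"id": "btn-signin", "text": "Log in"}]  # the id was renamed in a release

print(login.find(old_page))  # matched by id, nothing to heal
print(login.find(new_page))  # id no longer matches, so the text fallback is used
print(login.strategies[0])   # ('text', 'Log in') is now tried first
```

A production tool would draw on far richer signals (XPath, CSS selectors, visual similarity, learned models), but the fallback-and-remember loop is the core of the behavior described above.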

Another interesting domain where the AI capabilities of autonomous testing shine through is visual testing. Traditionally, testers have had to rely on their own eyes to spot UI defects on a website. The approach was to take a screenshot of the expected UI (the baseline image), then compare it to the actual UI in production.

Humans are, after all, humans, and our eyes will eventually miss visual bugs here and there. That's not to mention the number of screenshots testers have to compare with each other. For eCommerce websites with thousands of webpages, manually comparing them is a truly tedious task. Take a moment to spot 3 differences between the 2 images below, and you'll see how long it would take a tester to do manual visual testing across thousands of images.

There are many issues with manual visual testing, and even with conventional automated visual testing. Pixel-by-pixel comparison flags even the smallest pixel differences as “visual bugs”, although the human eye can't register such a minuscule difference. AI-powered visual regression testing tools make the process much simpler. They can judge which differences truly affect the User Experience and, if configured, can even ignore dynamic zones (i.e. areas that frequently change on the web, such as date, time, or status icons) when comparing screenshots.
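The two ideas above, tolerating imperceptible pixel differences and skipping dynamic zones, can be sketched without any AI at all. The Python example below is a simple threshold-based comparison, not the learned model an AI-powered tool would use. The `changed_ratio` function, its `tolerance` parameter, and the `Zone` format are assumptions made for illustration.

```python
from typing import Sequence

Image = list[list[int]]           # grayscale pixels (0-255), rows of equal length
Zone = tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def changed_ratio(baseline: Image, actual: Image, tolerance: int = 10,
                  ignore_zones: Sequence[Zone] = ()) -> float:
    """Fraction of compared pixels whose brightness differs by more than `tolerance`."""
    if len(baseline) != len(actual) or any(
        len(b) != len(a) for b, a in zip(baseline, actual)
    ):
        raise ValueError("images must have the same dimensions")
    compared = changed = 0
    for y, (row_b, row_a) in enumerate(zip(baseline, actual)):
        for x, (pixel_b, pixel_a) in enumerate(zip(row_b, row_a)):
            # Skip pixels inside any dynamic zone (dates, clocks, status icons, ...)
            if any(t <= y < b and l <= x < r for t, l, b, r in ignore_zones):
                continue
            compared += 1
            if abs(pixel_b - pixel_a) > tolerance:
                changed += 1
    return changed / compared if compared else 0.0


baseline = [[100, 100, 100, 100], [100, 100, 100, 100]]
actual = [[102, 100, 100, 100], [100, 100, 0, 255]]  # last pixel plays the "clock"

print(changed_ratio(baseline, actual, tolerance=0))  # strict pixel-by-pixel comparison
print(changed_ratio(baseline, actual))               # tiny differences tolerated
print(changed_ratio(baseline, actual, ignore_zones=[(1, 3, 2, 4)]))  # clock ignored
```

With a strict comparison every changed pixel counts. Raising the tolerance drops the barely visible change, and masking the dynamic zone leaves only the one difference a user would actually notice.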

Benefits Of Autonomous Testing

With the capabilities above, autonomous testing can truly supercharge your testing. AI/ML cannot replace testers yet, but its impact should be acknowledged. Testers should embrace AI/ML as a powerful tool to 10x their productivity and transform themselves into strategic thinkers who know how to direct AI/ML to work for them. Several immediate benefits that testers can gain from autonomous testing include:

- Accelerated testing: with the tedious, repetitive aspects of traditional software testing taken care of, testers can work at a much faster pace. Companies that embrace AI testing can gain a competitive advantage in their field by shortening their time-to-market. Every time they roll out a new update, the AI can immediately generate test scenarios and execute regression test suites, self-healing any test script broken by code changes and saving QA teams a lot of time.

- Increased productivity: thanks to the generative power of AI, teams can create test data, test scripts, and test scenarios at scale, at greater levels of customization. Instead of spending hours creating those data points/test scripts from scratch, they can just instruct the AI to do that for them. The level of data comprehensiveness is also higher, allowing QA teams to cover more issues.

- Increased system versatility: an autonomous testing system can adapt itself to changes in the product thanks to continuous learning algorithms embedded in its core. Over time, it will be able to generate not just better but also more organization-specific test scenarios/test data. Such adaptability relieves organizations of much of the burden of test maintenance.

- Cost-effectiveness: as companies need fewer resources allocated to testing activities, we can expect higher productivity per resource unit at the organizational level. The initial setup and configuration costs are high, and results might not be immediate since the system needs time to learn the organization's testing needs and patterns, but the long-term ROI can be well worth the investment.

- Competitive advantage: all of the benefits above directly translate into competitive advantages for the organization. QA teams can focus on truly critical tasks, while developers gain almost instant feedback for their builds.

Understanding The Concept of Autonomy

To understand the autonomous testing concept more in-depth, we first need to define “autonomy”.

Put simply, autonomy is the extent to which a system can operate, make decisions, and perform tasks without human intervention or guidance. It is an inherent attribute of any system and not exclusive to software.

Autonomy exists on a spectrum, with the lowest level being No Autonomy, where the system completely follows human commands, and therefore humans have to be responsible for all decision-making related to this system. At the highest level - Full Autonomy - the system operates entirely on its own, without any need for intervention from humans.

The same spectrum can be applied in a software testing context. In fact, the automotive industry has long had a benchmark for measuring levels of autonomy. This benchmark sets out 6 stages of human-machine integration:

- Completely Manual

- Assisted Automation

- Partial Automation

- Integrated Automation

- Intelligent Automation

- Completely Autonomous

We can also set out 6 stages of human-machine integration for the software testing industry.

Developing A Benchmark For Autonomous Testing

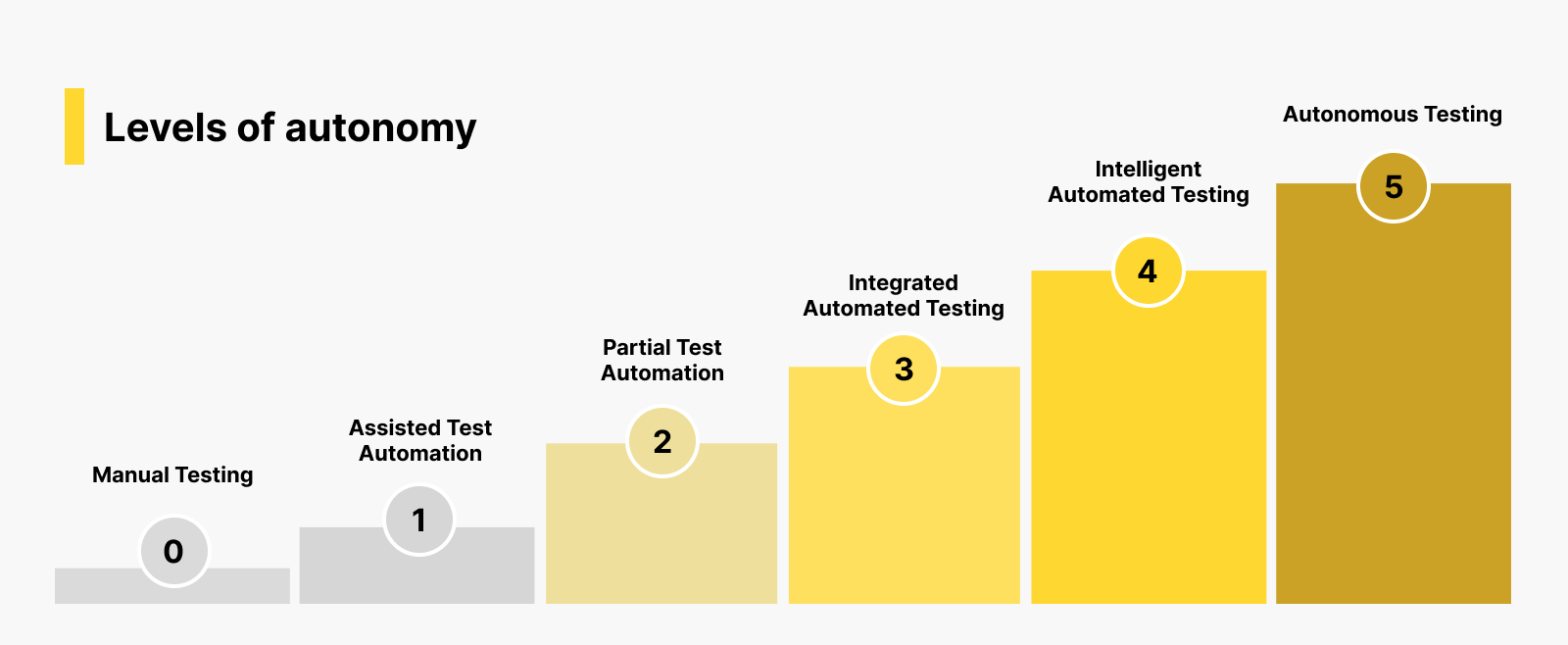

Inspired by the benchmark in the automotive industry, we have developed a benchmark for autonomous testing called the Autonomous Software Testing Model (ASTM).

The ASTM represents 6 levels of autonomy, with Level 0 being completely Manual Testing and Level 5 being Autonomous Testing.

- Level 0, Manual Testing: human testers make all decisions and perform all testing activities.

- Level 1, Assisted Test Automation: automated testing tools or scripts are used to assist human testers, who still have to actively create and maintain those automated test scripts. At this level, humans play a crucial role in test design and test management.

- Level 2, Partial Test Automation: both humans and computers engage in testing activities and propose potential decision choices, yet the majority of testing decisions are still made by humans.

- Level 3, Integrated Automated Testing: in this phase, the computer generates a list of decision alternatives, chooses one for action, and proceeds only if the human approves. Alternatively, the human can opt for a different decision alternative for the computer to execute.

- Level 4, Intelligent Automated Testing: the computer generates decision alternatives, evaluates and selects the optimal one, and performs testing actions accordingly. Human intervention is still an option if necessary.

- Level 5, Autonomous Testing: the computer assumes complete control over the testing process for the System Under Test (SUT), which encompasses decision-making and the execution of all testing actions. Human intervention is not possible.
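One way to read the six levels is as a rule about who has the final say before a testing action runs. The Python sketch below encodes that reading. The `AstmLevel` enum and the two helper functions are hypothetical names invented for this illustration, and the rules are a simplification of the descriptions above rather than an official definition of the model.

```python
from enum import IntEnum


class AstmLevel(IntEnum):
    MANUAL = 0       # Manual Testing
    ASSISTED = 1     # Assisted Test Automation
    PARTIAL = 2      # Partial Test Automation
    INTEGRATED = 3   # Integrated Automated Testing
    INTELLIGENT = 4  # Intelligent Automated Testing
    AUTONOMOUS = 5   # Autonomous Testing


def tool_may_act(level: AstmLevel, human_approved: bool) -> bool:
    """Can the tool carry out a testing action at this level?"""
    if level == AstmLevel.MANUAL:
        return False  # humans perform every activity themselves
    if level >= AstmLevel.INTELLIGENT:
        return True   # the tool selects and executes on its own
    return human_approved  # Levels 1-3: a human decision or approval comes first


def human_can_intervene(level: AstmLevel) -> bool:
    """Per the model, intervention is ruled out only at full autonomy."""
    return level < AstmLevel.AUTONOMOUS


for level in AstmLevel:
    print(level.name, tool_may_act(level, human_approved=False), human_can_intervene(level))
```

The jump between Integrated and Intelligent is the interesting one: it is where the default flips from "wait for approval" to "proceed unless stopped".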

Completely autonomous testing is not yet possible, since the technology is still in its infancy. Individual testers and small-scale projects may only have manual testing in their test plan due to the limited availability of resources. The majority follow a hybrid approach where a portion of their test cases are executed automatically using automation testing tools, while the rest are still executed manually to add a human touch to the process.

This means we are at around the second stage of the ASTM: Level 1, Assisted Test Automation.

At this level, the human decides the testing option, then the tool carries out the testing. Even the leading brands that have implemented AI features in their automated software testing processes still require a certain level of human intervention. A truly autonomous testing system is, indeed, a future that testers around the world are striving for.

However, we are moving towards that future at an unprecedented rate, and many AI testing tools have successfully delivered some features belonging to Level 3 autonomy. AI/ML technology by nature takes a long time to develop, since the models have to learn from gigantic data sources to be able to make statistical connections and give tailored testing recommendations. Given enough time, these autonomous testing tools will eventually be truly autonomous, reaching Level 5 of the ASTM.

From Manual Testing To Automation Testing To Autonomous Testing

The Autonomous Software Testing Model reflects the evolution of the software testing industry itself.

Starting from the repetitive, tedious, and counter-productive manual testing approach, we have gradually leveraged automation technologies to help us offload more and more tasks. Now, AI/ML technologies have become advanced enough to supercharge our testing and drastically enhance all aspects of it.

| Aspect | Manual Testing | Automation Testing | Autonomous Testing |
| --- | --- | --- | --- |
| Execution Method | Manual execution by testers | Automated execution using scripts and tools | AI-driven execution and analysis |
| Test Case Creation | Manual creation based on requirements | Test cases created once, can be reused | Test cases generated and adapted automatically |
| Speed and Efficiency | Slower and less efficient for repetitive tasks | Faster and more efficient for repetitive tasks | Extremely fast and efficient |
| Exploratory and Usability Testing | Effective for exploratory and usability testing | Less effective for exploratory and usability testing | Limited effectiveness for exploratory and usability testing |
| Skill Dependency | Relies on testers' skills and expertise | Requires scripting and tool expertise | Requires AI/ML expertise for setup and tuning |
| Cost | Lower initial investment but potentially higher long-term costs | Higher initial setup and maintenance costs, potentially lower long-term costs | Higher initial setup costs, potentially lower long-term costs |
| Adaptability | Ideal for early-stage or evolving projects | Ideal for stable, well-defined projects | Well-suited for stable projects with continuous testing needs |

Challenges On The Path To Autonomous Testing

- Complex Test Scenarios: Not all test scenarios are good candidates for automation. Usability testing and exploratory testing, for example, require human intuition and creativity, so for now we can only rely on manual testing for them. It is admittedly not easy to build a system that knows how to explore another, completely foreign system to uncover bugs, but achieving autonomous exploratory testing would be an important milestone not just for the software testing field but also for the AI field.

- Test Data Management: Ensuring the availability of realistic and diverse test data that reflects real-world conditions can be difficult. Test data generation is possible with good prompt engineering, but data privacy and data masking are challenges that organizations must consider.

- AI Model Training: AI models used in autonomous testing need ongoing training and fine-tuning to adapt to evolving applications and changing testing requirements. This requires continuous effort and enormous investment in research. OpenAI reportedly lost around $540 million in 2022 while developing its ground-breaking chatbot ChatGPT, which has been widely leveraged for AI-powered software testing, and yet the industry is still only in the lower levels of the ASTM.

- Interoperability and Integration: Integrating autonomous testing systems with existing development, testing, and CI/CD pipelines can be complex and will probably require significant customization.

- AI Bias and Accuracy: AI algorithms may introduce biases or inaccuracies in test case generation, execution, or defect detection. Ensuring AI models are fair and reliable is crucial.

Despite these challenges, autonomous testing is critical for a company’s digital transformation, and it will become more and more viable as Machine Learning technology grows more sophisticated.

Wrapping Up

In short, Autonomous Testing is an ambitious and futuristic endeavor that is guaranteed to disrupt the testing landscape. Yet, the transition can be messy with emerging terminologies, concepts, and discussions, and the adoption of Autonomous Testing can bring a lot of new challenges for us to overcome along with its benefits.

Nevertheless, infusing automated testing tools with AI to create an intelligent, self-adapting testing tool is still a promising effort to help QA teams test better.

Katalon has been a part of this transformational journey. Having integrated AI-powered features into our testing platform, we empower teams to deliver intelligent, scalable tests.

Katalon pioneers the AI testing world and supercharges testers with its unique capabilities:

- StudioAssist: Utilizes ChatGPT to generate test scripts autonomously based on plain language input and promptly provides explanations for the test scripts to ensure comprehension among all stakeholders.

- Katalon GPT-powered manual test case generator: Integrates seamlessly with JIRA by parsing the ticket's description, extracting relevant details about your testing needs, and producing a customized set of thorough manual test cases specifically designed for the described testing scenario.

- SmartWait: Automatically pauses execution until all essential on-screen elements are detected and fully loaded before proceeding with the test.

- Self-healing: Automatically repairs broken element locators and incorporates these updated locators into subsequent test runs, thereby removing the burden of maintenance.

- Visual testing: Indicates if a screenshot will be taken during test execution using Katalon Studio, then assesses the outcomes using Katalon TestOps. AI is used to identify significant alterations in UI layout and text content, minimizing false positive results and focusing on meaningful changes for human users.

- Test failure analysis: Automatically classifies failed test cases based on the underlying cause and suggests appropriate actions.

- Test flakiness: Understands the pattern of status changes from a test execution history and calculates the test's flakiness.

- Image locator for web and mobile app tests (Katalon Studio): Finds UI elements based on their visual appearance instead of relying on object attributes.

- Web service anomalies detection (TestOps): Identifies APIs with abnormal performance.
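As an illustration of the flakiness idea in the list above, here is one simple way to score a test from its execution history in Python. This is not Katalon's actual formula, which the article does not describe. The `flakiness` function and the status strings are assumptions for this sketch, which just counts how often the status flips between consecutive runs.

```python
def flakiness(history: list[str]) -> float:
    """Share of consecutive run pairs whose status changed (e.g. PASSED to FAILED)."""
    if len(history) < 2:
        return 0.0  # a single run cannot show a pattern of change
    flips = sum(1 for previous, current in zip(history, history[1:]) if previous != current)
    return flips / (len(history) - 1)


print(flakiness(["PASSED", "FAILED", "PASSED", "PASSED", "FAILED"]))  # 0.75
print(flakiness(["FAILED", "FAILED", "FAILED"]))                      # 0.0
```

Note that a test failing on every run scores 0.0: it is broken, not flaky. Only unstable results raise the score, which is what separates flakiness from a plain failure rate.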

With that traction, Katalon strives to reach an autonomous future where teams can build and deploy at unprecedented efficiency.
