What is Cross Browser Testing? Definition, Process, Best Practices

What is Cross Browser Testing?

Cross-browser testing is a type of software testing where testers assess the compatibility and functionality of a website or web application across various web browsers, platforms, and versions. The practice arose from the inherent differences among popular web browsers (such as Chrome, Firefox, or Safari) in their rendering engines, HTML/CSS support, JavaScript interpretation, and performance characteristics, all of which lead to inconsistencies in user experience.

Cross browser testing gives developers the insight they need to eliminate those inconsistencies and deliver a consistent experience, no matter which browser users choose to access the website or web application.

A common misconception about cross browser testing is that it only involves testing on a few different browsers. In reality it is a much broader term once we take into account the number of versions of those browsers, operating systems, and devices, because each combination provides a different experience. At the time of writing, there are:

- 9000+ distinct devices

- 21 different operating systems (including older versions)

- 5 major browser engines (Blink, the engine of the Chromium project; WebKit; Gecko; and the legacy Trident and EdgeHTML) behind thousands of browsers (Google Chrome, Mozilla Firefox, Apple Safari, Microsoft Edge, Opera, etc.)

Together, they create more than 63,000 possible browser-device-OS combinations that testers must consider when performing cross browser testing. The sheer number of combinations makes full browser coverage an ambitious task, so QA professionals tend to limit their tests to the combinations most relevant to their business. Some companies use test automation tools to expand their browser coverage.
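
To make the combinatorics concrete, here is a minimal Python sketch that enumerates a small browser/OS matrix and drops pairs that cannot exist. The browser names, operating systems, and availability table are illustrative assumptions, not the figures quoted above.

```python
from itertools import product

# Hypothetical availability table: which operating systems each browser runs on.
AVAILABLE_ON = {
    "Chrome": {"Windows", "macOS", "Linux", "Android", "iOS"},
    "Firefox": {"Windows", "macOS", "Linux", "Android", "iOS"},
    "Safari": {"macOS", "iOS"},
    "Edge": {"Windows", "macOS", "Linux", "Android", "iOS"},
}
OPERATING_SYSTEMS = ["Windows", "macOS", "Linux", "Android", "iOS"]


def valid_combinations(available_on, operating_systems):
    """Return every (browser, os) pair that can actually exist."""
    return [
        (browser, os_name)
        for browser, os_name in product(available_on, operating_systems)
        if os_name in available_on[browser]
    ]


combos = valid_combinations(AVAILABLE_ON, OPERATING_SYSTEMS)
print(len(combos), "valid pairs out of", len(AVAILABLE_ON) * len(OPERATING_SYSTEMS))
```

Adding browser versions and device models as extra dimensions multiplies the list further, which is why teams usually prune it to the combinations their users actually have.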

In this article, we will delve into the concepts of cross-browser testing, explore its process, and discover how QA professionals can overcome challenges in cross-browser testing.

Why is Cross Browser Testing Important?

As shown above, there are thousands of possible browser-device-OS combinations, which makes web browsing an incredibly diverse and personalized experience. Many businesses only test their website on Google Chrome, but Chrome accounted for only 63.45% of the browser market as of April 2023, so testing on Chrome alone leaves the experience of more than a third of users unverified. Users can reach your website from many other browsers and devices, and without cross browser testing we don't know whether the website functions consistently on them.

There are many ways browsers can impact the web experience:

- Rendering Differences: Browsers interpret HTML and CSS rules differently, leading to variations in the rendering of web pages. For instance, one browser can display a particular font or element slightly larger or smaller than another browser, causing misalignments or inconsistent layouts.

- JavaScript Compatibility: Some browsers lack support for certain JavaScript APIs. Some functionalities may work flawlessly in one browser but encounter errors or fail to work as expected in another. Developers can uncover compatibility issues and implement workarounds or alternative approaches to ensure consistent behavior.

- Performance Variations: Browsers differ in how they handle rendering, execute JavaScript, and manage memory. A website that performs well in one browser can load slowly in another.

Many issues can go unnoticed without cross browser testing. Below are some examples, any of which could be happening on your website without your knowing:

- Dropdown menus fail to display correctly in certain browsers.

- Video or audio content does not play in specific browser versions.

- Hover effects or tooltips do not function as expected.

- The website layout appears distorted or broken on mobile devices.

- JavaScript animations or transitions do not work smoothly in some browsers.

- Page elements overlap or are misaligned in certain browser resolutions.

- Clicking on a button or link does not trigger the intended action in a specific browser.

- Background images fail to load or appear distorted in certain browsers.

- CSS gradients or shadows are rendered differently, affecting the visual appearance.

- Web fonts do not render correctly or show fallback fonts in specific browsers.

- Media queries or responsive design features do not adapt properly across different browsers.

- Web application functionalities, like drag-and-drop or file uploads, do not work in specific browsers.

What To Test In Cross Browser Testing?

The QA team needs to prepare the list of items they want to check in their cross browser compatibility testing.

- Base Functionality: to verify that the core features of the website function as expected across browsers. Important features to add to the test plan include:

  - Navigation: test the navigation menu, links, and buttons to ensure they lead to the correct pages and sections of the website

  - Forms and Inputs: test form validation, input fields, submission, and error handling

  - Search Functionality: test whether the Search feature returns the expected results

  - User Registration and Login: test that account registration completes without friction and that account verification emails are sent to the right address

  - Web-specific Functionalities: test whether web-specific features (such as eCommerce product features or SaaS features) function as expected

  - Third-party integrations: test the functionality and data exchange between the website and third-party services or APIs

- Design: to verify if the visual aspect of the website is consistent across browsers. Testers usually leverage visual testing tools and the pixel-based comparison approach to identify discrepancies in layouts, fonts, and other visual elements on many browsers

- Accessibility: to verify that the website works with assistive technologies and is usable by people with disabilities. Accessibility testing involves examining whether the web elements can be reached with the keyboard alone, whether the color contrast is acceptable, whether alt text is available for all images, etc.

- Responsiveness: to verify whether the screen resolution affects the layout. Since Google's move to mobile-first indexing, responsive design matters more than ever because it is among the major Google ranking factors. Web developers and testers must therefore pay attention to the resolutions of the browsers and devices their customers use most, and build suitable testing strategies into the plan.
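
The pixel-based comparison mentioned under Design can be sketched in a few lines of Python. This is a simplified illustration, assuming screenshots have already been decoded into rows of (R, G, B) tuples; the function name and the `tolerance` parameter are hypothetical and do not describe how any particular visual testing tool works.

```python
def pixel_diff_ratio(baseline, candidate, tolerance=0):
    """Return the fraction of pixels whose channels differ by more than tolerance.

    Both images are lists of rows; each pixel is an (R, G, B) tuple.
    """
    if len(baseline) != len(candidate) or any(
        len(a) != len(b) for a, b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have the same dimensions")

    total = changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for px_a, px_b in zip(row_a, row_b):
            total += 1
            if any(abs(ca - cb) > tolerance for ca, cb in zip(px_a, px_b)):
                changed += 1
    return changed / total if total else 0.0


# Tiny made-up 2x2 "screenshots" from two browsers.
WHITE, BLACK, NEAR_WHITE = (255, 255, 255), (0, 0, 0), (253, 254, 255)
chrome_shot = [[WHITE, WHITE], [WHITE, BLACK]]
firefox_shot = [[WHITE, NEAR_WHITE], [BLACK, BLACK]]

strict = pixel_diff_ratio(chrome_shot, firefox_shot)
lenient = pixel_diff_ratio(chrome_shot, firefox_shot, tolerance=3)
print(strict, lenient)
```

A small tolerance keeps minor anti-aliasing or font-smoothing differences from being reported as layout defects, at the cost of missing very subtle color changes.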


Who Will Do Cross Browser Testing?

Considering the variety of browsers, versions, and platforms available, it is a daunting task for any tester to do the browser selection. More importantly, testers usually don’t have access to the right data to make this decision; instead, it is the client, business analysis team, and marketing teams that play a significant role.

Companies must collect usage and traffic data to identify the most commonly used browsers, environments, and devices. The testing team only serves as an advisor in this phase, and once the final choice has been made they can start evaluating the application's performance across multiple browsers.
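
As a rough illustration of turning traffic data into a browser shortlist, the Python sketch below picks the most-used browsers until a target share of sessions is covered. The share figures and the 90% target are made-up assumptions; real numbers would come from your own analytics.

```python
def select_browsers(traffic_share, target_coverage):
    """Pick the most-used browsers until their combined share reaches the target.

    traffic_share maps a browser name to its percentage of sessions.
    """
    selected, covered = [], 0.0
    ranked = sorted(traffic_share.items(), key=lambda item: item[1], reverse=True)
    for browser, share in ranked:
        if covered >= target_coverage:
            break
        selected.append(browser)
        covered += share
    return selected, covered


# Hypothetical analytics export; replace with your own traffic data.
shares = {
    "Chrome": 60.0,
    "Safari": 20.0,
    "Edge": 8.0,
    "Firefox": 7.0,
    "Opera": 3.0,
    "Samsung Internet": 2.0,
}
browsers, covered = select_browsers(shares, target_coverage=90.0)
print(browsers, covered)
```

The same approach extends to browser versions or devices by using more specific keys, such as a (browser, version) pair.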

Once the testing has been done and defects are found, the design and development teams interpret the results and make respective adjustments on the visual elements and the code base.

When To Do Cross Browser Testing?

Cross browser testing should be performed continuously along the development roadmap to ensure the proper functioning of new features and prevent any disruptions in previously functional elements caused by new code additions (also known as regression testing).

Delaying cross browser testing until the project's end significantly increases the complexity and cost of fixing any issues found, compared to resolving them incrementally during development. For this reason, many QA teams have adopted a shift left testing strategy that integrates testing activities into development workflows, enabling more comprehensive quality management.

How To Do Cross Browser Testing?

The cross browser testing and bug fixing workflow for a project can be roughly divided into the following 6 phases (which are in fact the Software Testing Life Cycle and can be applied to any type of testing):

1. Requirement Analysis

2. Test Planning

3. Environment Setup

4. Test Case Development

5. Test Execution

6. Test Cycle Closure

1. Plan For Cross Browser Testing

During the initial planning phase, the team holds discussions with the client or the business analyst to determine exactly which aspects are to be tested. All testing requirements, along with detailed information on resource availability and schedules, are outlined in a test plan. Ideally we would test the entire application on all browsers, but time and cost are limited. One effective strategy is to run 100% of the tests on 1 major browser, then test only the most critical and commonly used features on the other browsers.
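
The "everything on one browser, critical features on the rest" strategy can be expressed as a small planning helper. This Python sketch is only an illustration; the test case names, the critical flags, and the browser choices are hypothetical.

```python
def build_test_plan(test_cases, primary_browser, secondary_browsers):
    """Map each browser to the names of the test cases it should run.

    test_cases is a list of (name, is_critical) pairs. The primary browser
    gets the full suite; every other browser gets only the critical cases.
    """
    plan = {primary_browser: [name for name, _ in test_cases]}
    critical = [name for name, is_critical in test_cases if is_critical]
    for browser in secondary_browsers:
        plan[browser] = list(critical)
    return plan


# Hypothetical test inventory for illustration.
cases = [
    ("checkout", True),
    ("login", True),
    ("newsletter signup", False),
    ("footer links", False),
]
plan = build_test_plan(cases, "Chrome", ["Firefox", "Safari"])
for browser, names in plan.items():
    print(browser, names)
```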

Once the required feature set and preferred technologies are identified, we can start to analyze the target audience's browsing habits, devices, and other relevant factors. Existing client data or external sources such as competitor usage statistics and geographic considerations can aid in this assessment.

Let’s consider an eCommerce site catering to North American customers. Based on the data collected from traffic analytics, this website should:

- Seamlessly operate on the latest versions of popular desktop and mobile browsers (Chrome, Firefox, Edge, Safari, and Opera)

- Provide an acceptable experience on IE 8 and 9, which still account for a share of its traffic

- Comply with WCAG AA accessibility standards
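
One WCAG AA requirement that is easy to check programmatically is color contrast. The Python sketch below follows the relative luminance and contrast ratio formulas defined in WCAG 2.x; the function names are our own, and a real accessibility audit covers far more than contrast.

```python
def relative_luminance(rgb):
    """Relative luminance of an sRGB color, per the WCAG 2.x definition."""
    def linearize(channel):
        c = channel / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (identical) up to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """WCAG AA asks for at least 4.5:1 for normal text and 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


WHITE = (255, 255, 255)
print(round(contrast_ratio((0, 0, 0), WHITE), 2))  # black on white
print(passes_aa((118, 118, 118), WHITE))           # mid-gray text on white
```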

Having determined the target testing platforms, it is necessary to revisit the feature requirements and technology choices as outlined in the test plan.

2. Choose Between Manual Testing vs Automation Testing

We can manually test the website on different browsers simply by opening the desired browser and accessing the website from there. Testers have to repeat the test cases on several browsers to see how the application behaves and report any bugs they find. Although straightforward, manual testing is time-consuming and prone to human error. In the long run, manual cross browser testing is not scalable.

Automated cross browser testing involves using tools to create and execute repetitive test cases to improve test consistency, efficiency, and accuracy. QA teams face a build-versus-buy choice: either they build a fully customized tool in-house from scratch or they buy a tool from a vendor. Whichever option they choose, a good cross browser testing tool should:

- Offer a Virtual Private Network (VPN) that allows you to connect to remote machines and assess the performance of your Java, AJAX, HTML, Flash, and other pages

- Provide screenshots to show how pages and links appear across different browsers, capturing their static presentation (please note that this is more of a visual testing feature than cross browser testing). In other words, the tool should have functional testing and UI testing built in.

- Support a wide range of browsers and their versions

- Support a wide range of screen resolutions

- Support both web and mobile applications, including private pages that require authentication and local pages within a private network or firewall

Whether your team should choose manual or automated testing depends on the specific test project. As a rule of thumb, automate repetitive tests and manually perform ad-hoc, exploratory, and usability tests.

3. Setup Test Environment

Environment setup is a huge challenge for cross browser testers because investing in physical machines is extremely costly. You need to have at least 1 Windows PC, Mac computer, Linux machine, iPhone, Android phone, iPad, Android tablet, and many other IoT devices if your product provides such integrations. Then consider the fact that you have to test not only the current but also many older versions of each of those machines, and the resources required to maintain the systems and keep them running. Managing test cases and test results on so many devices is a struggle when you have no centralized system connecting such different machines.

Testers need to consider better approaches, and the 3 most common are:

- Emulators, simulators, or virtual machines (VMs) with installed browsers: these solutions essentially simulate the system you want to test on, and you don’t have to spend on physical machines. Although this approach is affordable, its scalability is limited, and test results on virtual mobile platforms (Android and iOS) may not be completely reliable.

- Remote Access Services: testers can use remote access services to connect to real devices to perform cross-browser testing without having the devices physically present. Testers can have almost complete control and customization over the additional tools and frameworks they want to install when using these services.

- Cloud-based Testing Platforms: testers can sign up for platforms that provide a wide range of browsers and operating system combinations already configured for them to begin testing right away. These platforms usually come with automation capabilities, collaboration, and managed infrastructure, so testers can offload their responsibilities and focus on critical testing tasks.
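
Whichever option you pick, each target environment has to be described in a form the automation layer understands. As one possible sketch, assuming the remote machines or cloud platform accept W3C WebDriver sessions (an assumption, not something every service guarantees), the Python snippet below builds the JSON body of a "New Session" request for each target. The target list is hypothetical, and nothing is sent over the network.

```python
import json


def new_session_payload(browser_name, browser_version=None, platform_name=None):
    """Build the JSON body for a W3C WebDriver "New Session" request."""
    always_match = {"browserName": browser_name}
    if browser_version:
        always_match["browserVersion"] = browser_version
    if platform_name:
        always_match["platformName"] = platform_name
    return {"capabilities": {"alwaysMatch": always_match}}


# Hypothetical target environments for illustration.
targets = [
    {"browser_name": "chrome", "platform_name": "windows"},
    {"browser_name": "firefox", "platform_name": "linux"},
    {"browser_name": "safari", "platform_name": "mac"},
]
payloads = [new_session_payload(**target) for target in targets]
print(json.dumps(payloads[0], indent=2))
```

Keeping the environment list as plain data makes it easy to add or retire a browser without touching the test scripts themselves.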

4. Develop Test Cases

For manual testing, testers can leverage AI-powered test case generation features in Katalon with JIRA integration. With the help of ChatGPT, we can streamline the process of creating test cases by automating the generation of well-organized, comprehensive, and accurate test cases using natural language inputs. This improves efficiency, reduces the need for manual work, and enhances the overall test case development process.

After integrating Katalon with JIRA, installing the Katalon - Test Automation for JIRA plugin, and configuring the API key, testers should see a “Katalon manual test cases” button in their JIRA ticket. When you click this button, the Katalon Manual Test Case Generator processes your input, analyzes the ticket title and description, and provides detailed manual test cases.

As you can see below, Katalon has generated 10 manual test cases for you within seconds, and you can easily save these test cases to the Katalon Test Management system, which helps you track their status in real-time.

For automation testing, you can leverage the Built-in Keywords and Record-and-Playback. The Built-in Keywords are essentially prewritten code snippets that you can drag and drop to structure a full test case without writing any code, while Record-and-Playback records the actions on your screen and turns them into an automated test script that you can re-execute on any browser or device you want.

5. Execute The Tests

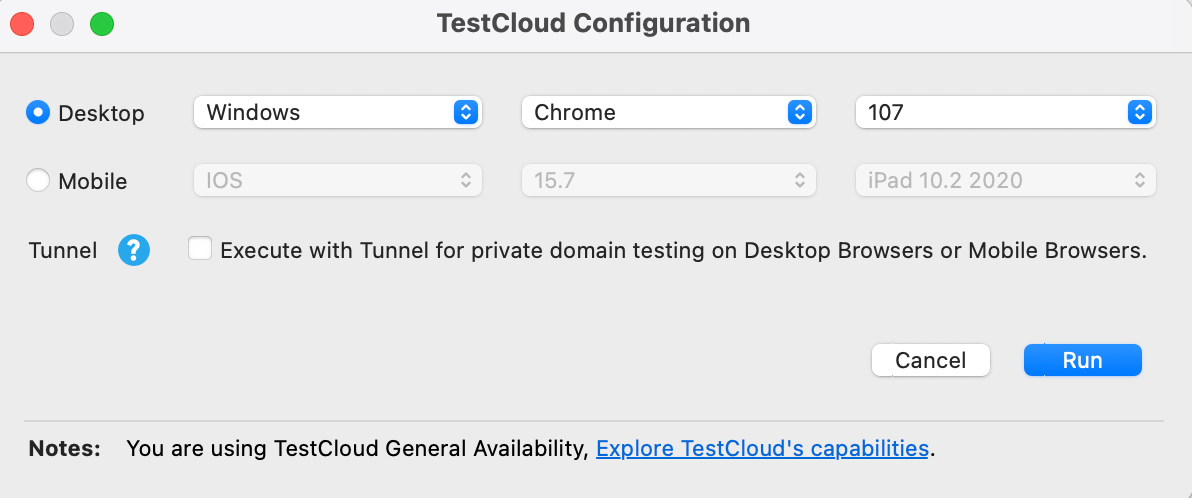

If testers go for manual testing, they can simply open the browser and run the tests they have planned out, then record the results manually. If they choose automation testing, they can configure the environment they want to execute on then run the tests. In Katalon TestCloud, after constructing a test script, testers can easily select the specific combination they want to run the tests on.

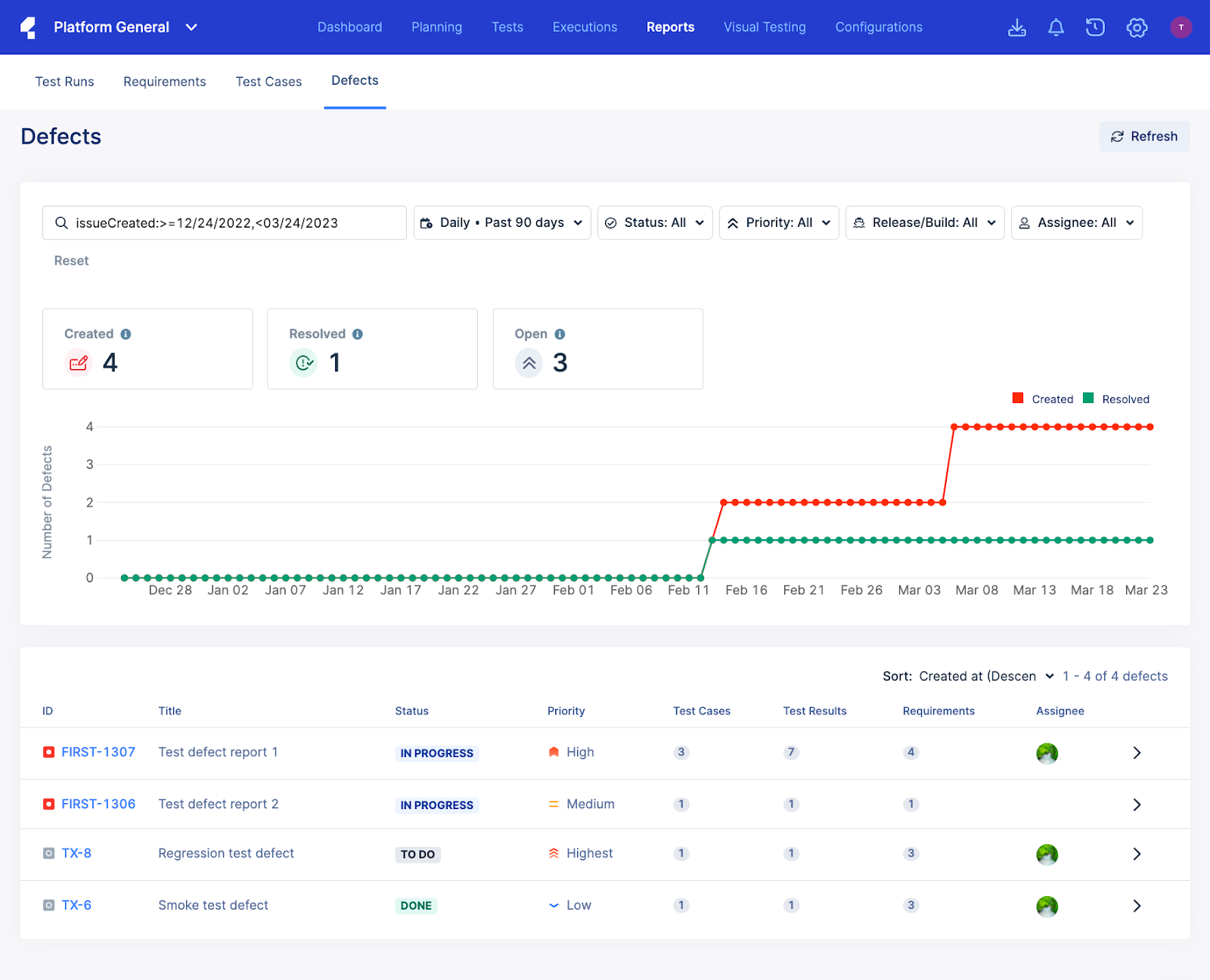
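
Conceptually, automated execution means running the same checks against every selected environment and recording each outcome. The Python sketch below shows that loop in a tool-agnostic way; it is not how Katalon TestCloud works internally, and the check functions are hypothetical stand-ins for real browser interactions.

```python
def run_matrix(checks, environments):
    """Run every check in every environment and record pass or fail.

    checks maps a check name to a callable that takes the environment dict
    and raises AssertionError when the expectation is not met.
    """
    results = []
    for env in environments:
        for name, check in checks.items():
            try:
                check(env)
                outcome, detail = "pass", ""
            except AssertionError as exc:
                outcome, detail = "fail", str(exc)
            results.append(
                {"check": name, "browser": env["browser"], "outcome": outcome, "detail": detail}
            )
    return results


# Hypothetical stand-ins for real browser checks: pretend drag-and-drop is
# broken in one browser so the runner has a failure to record.
def check_drag_and_drop(env):
    assert env["browser"] != "Safari", "drop target did not receive the item"


def check_homepage_loads(env):
    assert env["browser"], "environment must name a browser"


environments = [{"browser": "Chrome"}, {"browser": "Firefox"}, {"browser": "Safari"}]
checks = {"drag_and_drop": check_drag_and_drop, "homepage_loads": check_homepage_loads}
results = run_matrix(checks, environments)
failures = [r for r in results if r["outcome"] == "fail"]
print(failures)
```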

6. Report The Defects And Document Results

Finally, testers return the results for the development and design team to start troubleshooting. After the development team has fixed the bug, the testing team must re-execute their tests to confirm that the bug has indeed been fixed. These results should be carefully documented for future reference and analysis.
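
Documenting results and queuing re-tests can be as simple as summarizing a list of recorded outcomes. The Python sketch below assumes each result is a dict with `check`, `browser`, and `outcome` keys; that shape and the sample data are assumptions made for illustration.

```python
from collections import Counter


def summarize(results):
    """Count outcomes per browser from a list of result dicts."""
    summary = {}
    for result in results:
        summary.setdefault(result["browser"], Counter())[result["outcome"]] += 1
    return summary


def retest_queue(results):
    """Return the (check, browser) pairs that failed and need re-execution after a fix."""
    return sorted(
        {(r["check"], r["browser"]) for r in results if r["outcome"] == "fail"}
    )


# Hypothetical recorded results from one test cycle.
recorded = [
    {"check": "login", "browser": "Chrome", "outcome": "pass"},
    {"check": "login", "browser": "Safari", "outcome": "pass"},
    {"check": "file_upload", "browser": "Chrome", "outcome": "pass"},
    {"check": "file_upload", "browser": "Safari", "outcome": "fail"},
]
summary = summarize(recorded)
queue = retest_queue(recorded)
print(summary["Safari"]["fail"], queue)
```

Once the development team reports a fix, only the pairs in the queue need to be re-executed to confirm it, while the stored summary remains available for later analysis.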
