Mastering Prompt Engineering: Unleashing the Full Potential of StudioAssist in Katalon Studio

In the world of test automation, tools like Katalon Studio have revolutionized how we approach software testing. But what if we could take it a step further? Enter StudioAssist, a next-generation AI-powered coding companion designed to elevate your programming experience within Katalon Studio. Leveraging the power of Generative Pre-trained Transformers (GPT) and the robust foundation of Katalon Studio, StudioAssist doesn't just assist; it empowers. From context-based code suggestions (to create scripts or custom keywords) to detailed descriptions of your existing code, this tool can markedly reduce the time it takes to create stable automation scripts.

If you are familiar with AI-powered tools like ChatGPT, Bard, or other LLM-based solutions, you know that output quality depends heavily on the input question or prompt. Since StudioAssist is also powered by GPT technology, effective prompt engineering will generate more accurate test script code.

Our aim with this article is to provide a comprehensive guide on harnessing the power of prompt engineering, particularly within Katalon Studio. We believe that understanding and effectively using StudioAssist can considerably reduce your test generation efforts.

The Emergence of Prompt Engineering

What is a Prompt?

In its simplest form, a prompt is the text you feed into an AI model during an interaction. Think of it as the question or request that initiates an AI's response. In the context of StudioAssist, a prompt could be a specific scenario or set of instructions related to recreating test steps in a test script. The crux of prompt engineering revolves around optimizing these prompts to get the most accurate and helpful responses.

The Intricacies of Blind Prompts

Many users initially engage with AI models like OpenAI's GPT in a "chat mode," utilizing what is often referred to as a "blind prompt." These are open-ended questions or commands that typically yield a generic response. While it's convenient to ask a question and get an answer, the specificity and context you provide in your prompt can significantly influence the quality of the response.

Challenge: The Reliability Factor

One common challenge with blind prompts is their inherent unreliability. Users often find that the response received may not align with their expectations, either being too vague or entirely off-topic. This lack of reliability can become even more apparent when the same question is posed in different contexts, leading to varied results. Hence, using blind prompts without a comprehensive understanding of prompt crafting could lead to undesirable or unreliable outcomes.

The Essence of Prompt Engineering

So, what exactly is prompt engineering? It is the deliberate process of crafting prompts to elicit reliable, useful, and contextually relevant responses from an AI model. It is a mechanism to bring a degree of reliability and specificity to an otherwise unpredictable interaction with AI.

Why is Prompt Engineering Important?

One compelling reason lies in the relative pace of innovation in machine learning and software technologies. As these technologies evolve at breakneck speeds, understanding how to interact effectively with them becomes crucial. In the context of StudioAssist, effectively engineered prompts can yield more targeted and actionable test scripts, thereby maximizing the tool's utility.

Prompting Best Practices

Creating effective prompts is a nuanced art, and mastering it can make all the difference in how you interact with AI-powered tools like StudioAssist. In light of this, we present four pillars of prompting: best practices that provide a foundational framework for generating robust and reliable prompts.

1. Provide Examples

Begin by integrating examples into your prompt. Offering a diverse set of examples can serve dual purposes: it clarifies your intent and frees the AI from any creative constraints it might otherwise have. The idea is to show the AI the kind of output you're expecting, thereby leading to a more targeted response.

2. Give Directions for Formatting Responses

Clarifying your desired output format can further tailor the AI's response to your needs. For instance, if you're looking for code snippets, you could specify the programming language or the style you prefer. Clear directions for formatting can greatly influence the precision of the AI's output.
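As a rough sketch of how these first two practices can be combined, the Python helper below assembles a comment-style prompt that carries both a sample of the desired output and an explicit formatting instruction. The function name `build_prompt`, its parameters, and the test object path in the example are illustrative assumptions, not part of StudioAssist; the resulting text would simply be pasted into the script editor.

```python
def build_prompt(scenario, steps, example_output, format_hint):
    """Render a block-comment prompt that includes an example and a format hint.

    All names here are hypothetical; StudioAssist only sees the final text.
    """
    lines = ["/*", f" * Scenario: '{scenario}'"]
    lines += [f" * {step}" for step in steps]
    # Practice 1: show the kind of output we expect.
    lines.append(f" * Example of the expected style: {example_output}")
    # Practice 2: state the desired format explicitly.
    lines.append(f" * Format: {format_hint}")
    lines.append(" */")
    return "\n".join(lines)


prompt = build_prompt(
    scenario="Display list of available products",
    steps=["Open Browser", "Navigate to 'https://shop.polymer-project.org'"],
    example_output="WebUI.click(findTestObject('Page_Home/someLink'))",
    format_hint="Groovy, one WebUI keyword per line, no extra waits",
)
print(prompt)
```

Keeping the example and the format hint as separate arguments makes it easy to vary one while holding the other fixed when comparing results.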

3. Evaluate Quality

This pillar underscores the importance of iteration in improving prompt reliability. As you create and test prompts, you'll want to run the exact prompt multiple times to check for any inconsistencies or errors. Evaluating the quality of responses over several iterations helps you refine your prompts for greater reliability.

A quick note on "hallucinations": in the context of GPT, a hallucination refers to a response generated by the AI that is either factually incorrect or inconsistent with the given prompt. Being aware of these hallucinations is key to evaluating the quality of a prompt's response.
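One simple way to put a number on this kind of evaluation is to collect the outputs from several runs of the same prompt and measure how often they agree. The sketch below assumes the outputs were copied by hand into a list of strings (the sample data is made up), and the `consistency` score is our own illustrative metric rather than anything StudioAssist reports.

```python
from collections import Counter


def normalize(script):
    """Drop blank lines and surrounding whitespace so trivial differences are ignored."""
    return "\n".join(line.strip() for line in script.splitlines() if line.strip())


def consistency(responses):
    """Return the share of responses that match the most common normalized output."""
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(normalize(r) for r in responses)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(responses)


# Three outputs collected by hand from repeated runs of one prompt (made-up data).
runs = [
    "WebUI.openBrowser('')\nWebUI.navigateToUrl('https://shop.polymer-project.org')",
    "WebUI.openBrowser('')\n\n  WebUI.navigateToUrl('https://shop.polymer-project.org')",
    "WebUI.openBrowser('')\nWebUI.delay(10)",
]
score = consistency(runs)
print(f"{score:.2f}")
```

A score well below 1.0 suggests the prompt leaves too much open to interpretation and is worth tightening before relying on its output.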

4. Maintain a Dynamic Interaction

StudioAssist is designed to mimic the back-and-forth dynamic that users experience with other GPT-based tools like ChatGPT. This pillar encourages users to keep that conversational engagement with the AI, as it enhances the scope for clarification and fine-tuning prompts in real time.

Understanding and employing these practices can significantly enhance your ability to generate effective prompts. It's a structured approach that builds a solid foundation for anyone looking to explore the full scope of StudioAssist's capabilities, ultimately leading to more efficient and reliable test automation.

Prompt Engineering within StudioAssist in Katalon Studio

So, how can we apply all of these principles in practice using StudioAssist within Katalon Studio? To illustrate, we'll walk through the process of creating a test automation script for an online retailer, using shop.polymer-project.org as our test subject. We'll then craft an initial prompt in StudioAssist to evaluate the website, and apply the best practices discussed earlier to refine the prompt for a more accurate test script. Let's get started.

For the purposes of this example, we will assume that you are already familiar with Katalon Studio and all the necessary steps to set up the test environment.

Once we are in the script mode in Katalon Studio, we will provide the following prompt:

/*
 * URL: https://shop.polymer-project.org
 * As a customer, I want to be able to add items to my cart on the website so that I can purchase them later.
 */

/*
 * Create a Test Case with the name 'Display list of available products - 1a'
 * Navigate to homepage
 * Select a product category 'Mens Outerwear'
 * Verify list of available products is displayed
 * Click on a product
 * Select size
 * Select quantity
 * Click on 'Add to Cart' button
 * Click on Ladies Outerwear link
 * Click on Cart icon
 * Click on 'Checkout' button
 */

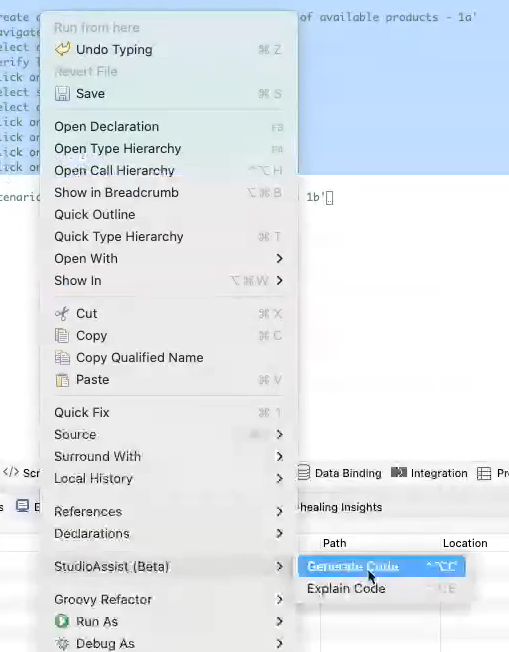

Once the prompt is in, right-click on the scripting space and select StudioAssist (Beta) -> Generate Code.

After a few moments, StudioAssist will provide the following results:

Let's analyze the results. The generated script navigates to the URL as expected, but it introduces an extra step: a 10-second wait for the page to load. This additional step is a prime example of a 'hallucination,' as it was not specified in the original prompt.

Up to this point, the prompt we used can be classified as a blind prompt. Since it serves as our baseline, we can use its outcome to craft a more refined prompt that aligns more closely with our expected results.
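Spotting extra steps like this wait can be partly automated. The sketch below scans a script for `WebUI` keyword calls and lists any that fall outside a set we consider requested. The script snippet is a hypothetical stand-in (the actual generated output is not reproduced here), and both the `allowed` set and the helper names are assumptions made for illustration.

```python
import re


def webui_calls(script):
    """Return the WebUI keyword names used in a script, in order of appearance."""
    return re.findall(r"WebUI\.(\w+)\s*\(", script)


def unrequested_calls(script, allowed):
    """List keyword calls that fall outside the set the prompt asked for."""
    return [name for name in webui_calls(script) if name not in allowed]


# Hypothetical generated snippet, used only to exercise the check.
generated = """
WebUI.openBrowser('')
WebUI.navigateToUrl('https://shop.polymer-project.org')
WebUI.delay(10)
"""
# Keywords the prompt's steps plausibly map to (an assumption on our part).
allowed = {"openBrowser", "navigateToUrl", "click"}
extras = unrequested_calls(generated, allowed)
print(extras)  # ['delay']
```

A check like this only flags candidates for review; an added wait may still be harmless or even useful, so the final judgment stays with the tester.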

Now let’s modify the prompt. Remember, the answer you get from any AI model will only be as good as the prompt you provide. In this case, we will increase the level of detail in the prompt. Here is the new version of the prompt we will use:

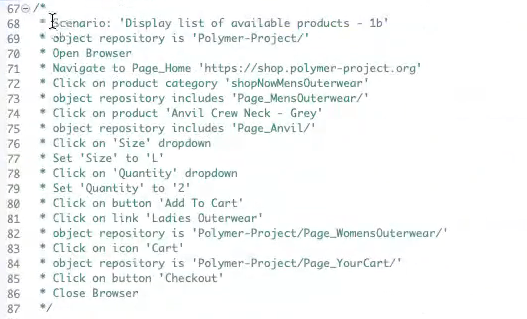

/*
 * Scenario: 'Display list of available products - 1b'
 * object repository is 'Polymer-Project/'
 * Open Browser
 * Navigate to Page_Home 'https://shop.polymer-project.org'
 * Click on product category 'shopNowMensOuterwear'
 * object repository includes 'Page_MensOuterwear/'
 * Click on product 'Anvil Crew Neck - Grey'
 * object repository includes 'Page_Anvil/'
 * Click on 'Size' dropdown
 * Set 'Size' to 'L'
 * Click on 'Quantity' dropdown
 * Set 'Quantity' to '2'
 * Click on button 'Add To Cart'
 * Click on link 'Ladies Outerwear'
 * object repository is 'Polymer-Project/Page_WomensOuterwear/'
 * Click on icon 'Cart'
 * object repository is 'Polymer-Project/Page_YourCart/'
 * Click on button 'Checkout'
 * Close Browser
 */

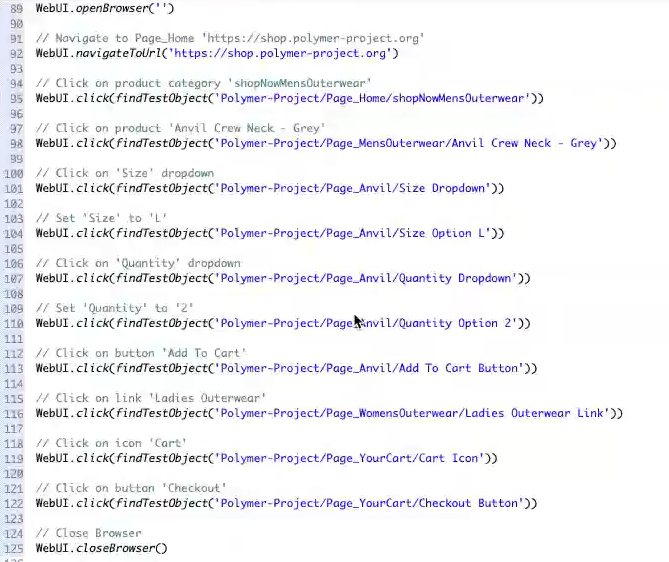

We will perform the same steps to instruct StudioAssist to create the script, and here is the result we obtained:

This prompt is considerably more detailed than the original: it names the object repository folders, the exact product, and the size and quantity to select. That added detail leaves StudioAssist less room to guess, which minimizes the occurrence of hallucinations in the script and should yield a test that follows the intended flow more closely.

Wrapping up!

This is just the beginning. While Katalon Studio’s StudioAssist technically focuses on creating and explaining code, the use cases expand exponentially when you apply prompt engineering best practices to your test authoring process. Future installments of this article series will dive into specific use cases like generating functions, crafting data sets, and formulating regular expressions, among others.

The more proficient you become at prompt engineering, the greater the value you'll extract from StudioAssist. Thank you for joining us in exploring this vital subject. Stay tuned for more in-depth coverage and practical examples!
